Introduction

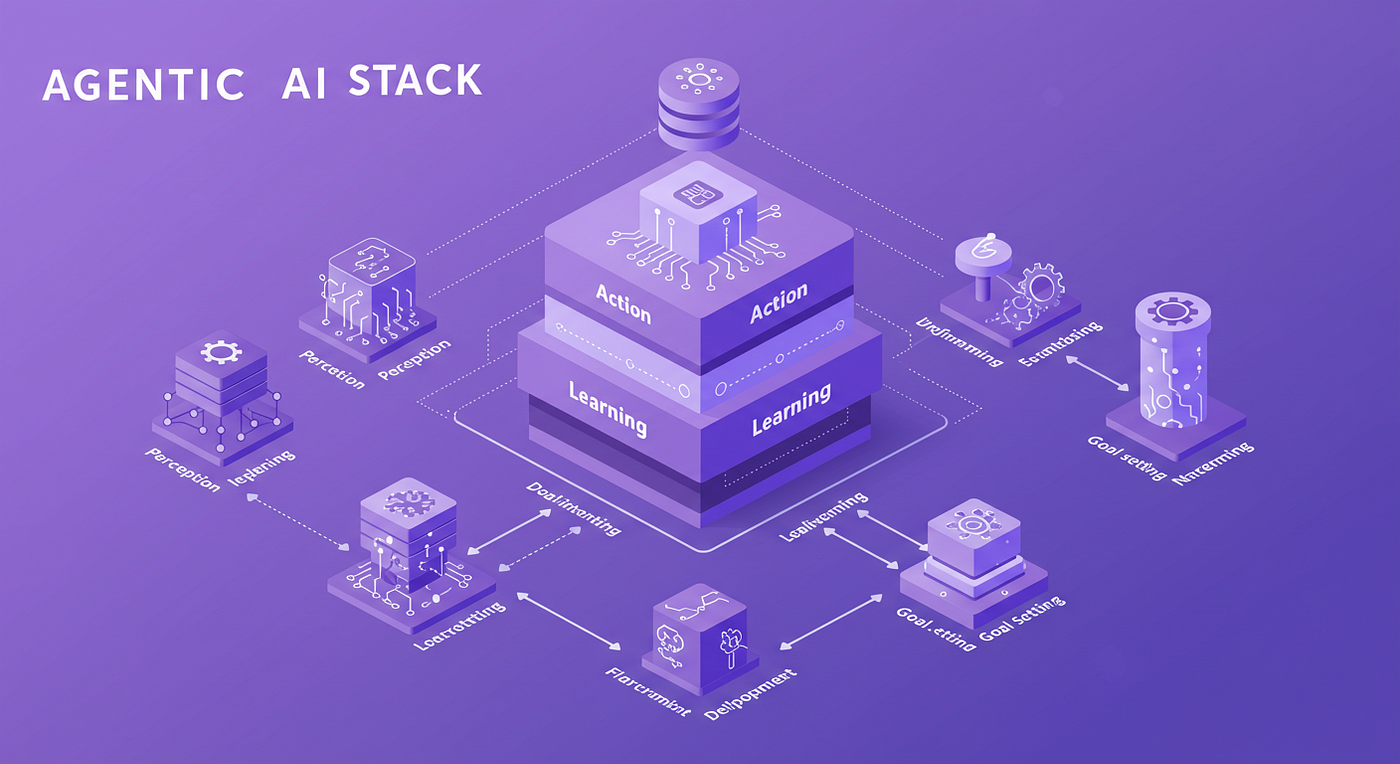

Agentic AI is one of 2025’s biggest shifts in artificial intelligence. Unlike traditional AI that passively responds to prompts, agentic AI systems act with a degree of autonomy: they set goals, make decisions, and execute actions with minimal human input.

But what actually powers these systems? Let’s dive into the tech stack behind Agentic AI.

1. Core Frameworks for Agentic AI

- LangChain

  - One of the most popular frameworks for building AI agents.

  - Helps chain prompts, manage context, and integrate external tools (databases, APIs).

  - Developers use LangChain to give LLMs “memory” and multi-step workflows.

- AutoGPT

  - An open-source experiment that showed how GPT-based models can loop through tasks, set subgoals, and work semi-independently.

  - Still experimental, but it inspired dozens of spinoffs for autonomous agents.

- CrewAI / AgentVerse

  - Newer tools that enable multi-agent collaboration: multiple AI agents working together, simulating a team environment.
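The plan, act, observe loop that AutoGPT popularized can be sketched in a few lines of plain Python. This is a minimal sketch, not AutoGPT's or LangChain's code: `plan` and `execute` are hypothetical stubs standing in for LLM and tool calls, and `max_steps` is an assumed safety cap that keeps the loop from running forever.

```python
from dataclasses import dataclass, field


def plan(goal: str) -> list[str]:
    # Hypothetical stand-in for an LLM planner that splits a goal into subgoals.
    return [f"research {goal}", f"draft {goal}", f"review {goal}"]


def execute(subgoal: str) -> str:
    # Hypothetical stand-in for acting on a subgoal (an LLM call, a tool call, etc.).
    return f"done: {subgoal}"


@dataclass
class Agent:
    goal: str
    max_steps: int = 10  # hard cap on iterations (assumed safety limit)
    history: list[str] = field(default_factory=list)  # observations so far

    def run(self) -> list[str]:
        queue = plan(self.goal)
        steps = 0
        while queue and steps < self.max_steps:
            subgoal = queue.pop(0)
            result = execute(subgoal)
            self.history.append(result)  # record the observation as short-term memory
            steps += 1
        return self.history


if __name__ == "__main__":
    print(Agent("blog post").run())
```

In a real agent, `execute` would feed its result back into the planner so the model can add or revise subgoals; the step cap matters most in exactly that case.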

2. Large Language Models (LLMs)

Agentic AI runs on top of powerful LLMs. Some leading options:

- OpenAI’s GPT-4/5 → General-purpose reasoning and task automation.

- Anthropic’s Claude 3.5 → Known for safety, reasoning depth, and handling long contexts.

- Mistral & Llama 3 → Open-weight alternatives that make locally hosted agentic systems possible.
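Because agents sit on top of interchangeable LLMs, one common design (assumed here, not prescribed by any of these vendors) is to write agent logic against a small interface so backends can be swapped. `ChatModel`, `EchoModel`, `UpperModel`, and `summarize_task` are hypothetical names; a real adapter would wrap a provider SDK or a local model runtime inside `complete`.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Any backend (hosted API or local open-weight model) that maps a prompt to text."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical offline stub; a real adapter would call a provider SDK here."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UpperModel:
    """A second hypothetical stub, showing that any object with `complete` fits."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize_task(model: ChatModel, task: str) -> str:
    # Agent logic depends only on the interface, not on a specific vendor.
    return model.complete(f"Summarize: {task}")


if __name__ == "__main__":
    for backend in (EchoModel(), UpperModel()):
        print(summarize_task(backend, "quarterly report"))
```

`Protocol` gives structural typing: the stubs never inherit from `ChatModel`, yet a type checker accepts them because they provide a matching `complete` method.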

3. Memory & Knowledge Management

To act “intelligently,” agents need memory:

- Vector Databases (Pinecone, Weaviate) and libraries like FAISS → Store and search embeddings for long-term recall.

- Knowledge Graphs (Neo4j, ArangoDB) → Map relationships between concepts, great for reasoning.

- ChromaDB → Lightweight, dev-friendly option for context storage.
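To illustrate what a vector store does under the hood, here is a minimal in-memory sketch of embedding-based recall ranked by cosine similarity. It assumes a toy bag-of-words `embed` function in place of a learned embedding model, and `VectorMemory` is a hypothetical class, not the Pinecone, Weaviate, FAISS, or Chroma API.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; real systems use a learned embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []  # (original text, embedding)

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        # Brute-force nearest-neighbour search; vector databases index this for scale.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    memory = VectorMemory()
    memory.add("the user prefers dark mode")
    memory.add("the deployment runs on kubernetes")
    print(memory.recall("what mode does the user prefer"))
```

The brute-force scan is linear in the number of stored items; dedicated vector stores exist largely to replace it with approximate nearest-neighbour indexes.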

4. Tool Use & APIs

Agents need hands-on capabilities, not just chat. That’s where tooling integration comes in:

- OpenAI’s Function Calling → Lets the model return structured calls to functions you define; your code executes them and passes the results back.

- LangChain Tools & Toolkits → Prebuilt connectors for search, math, coding, etc.

- Zapier & Make.com → Let agents interact with SaaS apps without writing custom integrations.
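Function calling follows a simple contract: the model emits a tool name plus JSON arguments, and the application looks up and runs that tool. The sketch below assumes a simplified JSON shape `{"name": ..., "arguments": {...}}` rather than OpenAI's exact response format; `TOOLS`, `tool`, `add`, `get_weather`, and `dispatch` are hypothetical names.

```python
import json
from typing import Any, Callable

# Registry of tools the agent is allowed to call (names are illustrative).
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    # Decorator that registers a function under its own name.
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def get_weather(city: str) -> str:
    # Hypothetical: a real tool would call a weather API here.
    return f"Sunny in {city}"


def dispatch(model_output: str) -> Any:
    """Run a tool call the model emitted as JSON: {"name": ..., "arguments": {...}}."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Refuse anything outside the allow-list instead of guessing.
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


if __name__ == "__main__":
    print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

Keeping an explicit registry means the model can only trigger functions you chose to expose, which is also where input validation for each tool belongs.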

5. Orchestration & Deployment

Running multiple autonomous agents requires orchestration:

- Ray / Dask → For distributed, parallel task execution.

- Prefect / Airflow → Workflow automation with monitoring.

- Docker + Kubernetes → Containerization and scaling of agent systems.
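The fan-out pattern that Ray or Dask provide at cluster scale can be sketched on one machine with Python's standard `concurrent.futures`. This is a stand-in, not Ray or Dask code; `run_agent` and `run_team` are hypothetical, and `time.sleep` simulates I/O-bound LLM or API calls.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_agent(name: str, task: str) -> tuple[str, str]:
    # Hypothetical agent work; the sleep simulates waiting on an LLM or API.
    time.sleep(0.1)
    return name, f"{name} finished {task}"


def run_team(assignments: dict[str, str], max_workers: int = 4) -> dict[str, str]:
    """Run one agent per assignment in parallel and collect results by agent name."""
    results: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run_agent, name, task) for name, task in assignments.items()]
        for future in as_completed(futures):
            name, output = future.result()  # re-raises any exception from the worker
            results[name] = output
    return results


if __name__ == "__main__":
    print(run_team({"researcher": "search", "writer": "draft", "critic": "review"}))
```

Threads suit I/O-bound agent work such as waiting on API responses. With Ray, the same fan-out would typically use `@ray.remote` functions whose results are collected with `ray.get`.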

6. Guardrails & Safety

No agent stack is complete without safety nets:

- Guardrails AI → Enforce structured outputs and policies.

- Human-in-the-Loop APIs → Insert checkpoints before high-risk actions.

- Ethical AI Frameworks → Tools for bias detection and transparency logging.
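Two of these safety nets, structured-output validation and a human approval checkpoint, can be combined in a short plain-Python sketch. It is not the Guardrails AI API: `HIGH_RISK_ACTIONS`, `REQUIRED_FIELDS`, `validate`, and `guarded_execute` are hypothetical, and the `approve` callback stands in for whatever review UI or queue a real system would use.

```python
import json
from typing import Callable

HIGH_RISK_ACTIONS = {"delete_records", "send_payment"}  # illustrative policy
REQUIRED_FIELDS = {"action": str, "target": str}  # expected keys and their types


def validate(raw: str) -> dict:
    """Reject model output that is not a JSON object with the required typed fields."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("output must be a JSON object")
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"missing or invalid field: {key}")
    return data


def guarded_execute(raw: str, approve: Callable[[dict], bool]) -> str:
    action = validate(raw)
    # Human-in-the-loop checkpoint: high-risk actions need explicit approval.
    if action["action"] in HIGH_RISK_ACTIONS and not approve(action):
        return "blocked: approval denied"
    return f"executed {action['action']} on {action['target']}"


if __name__ == "__main__":
    deny_all = lambda action: False
    print(guarded_execute('{"action": "read_report", "target": "q3"}', deny_all))
    print(guarded_execute('{"action": "send_payment", "target": "acct-1"}', deny_all))
```

Validation runs before the policy check, so malformed output never reaches the approval step or the action itself.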

Conclusion

The tech stack behind Agentic AI is evolving fast. At its core are frameworks like LangChain, powerful LLMs like GPT-4 and Claude, vector databases for memory, orchestration tools for scaling, and guardrails for safety. Together, they turn passive AI into active, autonomous systems ready to collaborate with humans, and perhaps even with each other.

🚀 Agentic AI isn’t just about smarter models — it’s about giving AI the tools, memory, and autonomy to act.